dorkbot NYC – April 2006

April 5, 2006

The nine million and eighty seventh dorkbot-nyc meeting took place on Wednesday, April 5th at 7pm.

It featured the lovely and talented:

John Arroyo: Eingen Rhythm Software

A rhythmic synthesizer was created using statistical machine learning analysis. It is a rhythm composer of sorts that is trained rather than programmed by the user. The end result is an intelligent groove box that can generate interpolations of the seed rhythms in real time. Each seed rhythm is automatically extracted and projected into a space; the user can then move around in this space and morph one rhythm into the next. More intelligent instruments are on the drawing board, moving towards a new paradigm in music software synthesis.
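For readers curious what "morphing one rhythm into the next" might look like in code, here is a minimal sketch. It is not the software's actual method: the abstract describes projecting extracted rhythms into a learned space, whereas this sketch simply interpolates linearly between two hand-written patterns. The names `morph` and `to_hits`, the 8-step grid, and the seed values are all assumptions made for illustration.

```python
def morph(rhythm_a, rhythm_b, t):
    """Linearly blend two equal-length velocity patterns.

    t = 0.0 returns rhythm_a, t = 1.0 returns rhythm_b.
    """
    if len(rhythm_a) != len(rhythm_b):
        raise ValueError("patterns must have the same number of steps")
    return [(1.0 - t) * a + t * b for a, b in zip(rhythm_a, rhythm_b)]


def to_hits(pattern, threshold=0.5):
    """Turn blended velocities into on/off steps for a sequencer."""
    return [1 if v >= threshold else 0 for v in pattern]


# Hypothetical seed rhythms: hit velocities (0.0 to 1.0) on an 8-step grid.
seed_a = [1.0, 0.0, 0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
seed_b = [1.0, 0.0, 1.0, 0.0, 0.0, 0.0, 1.0, 0.0]

# A point halfway between the two seeds.
halfway = morph(seed_a, seed_b, 0.5)
```

Sweeping `t` from 0 to 1 while the pattern loops is one simple way to hear a rhythm change gradually; a trained system would move through a richer space than a straight line between two points.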

http://www.rhythmicresearch.com

Jeff Han: Multi-Touch Interaction Research

While touch sensing is commonplace for single points of contact, multi-touch systems enable a user to interact with a system using more than one finger at a time, allowing for the use of both hands along with chording gestures. These kinds of interactions hold tremendous potential for advances in efficiency, usability, and intuitiveness. Multi-touch systems are also inherently able to accommodate multiple users simultaneously, which is especially useful for collaborative scenarios such as interactive walls and tabletops. We’ve developed a new multi-touch sensing technique that’s unprecedented in precision and scalability, and I will be demonstrating some of our latest research on the new sorts of interaction techniques that are now possible.

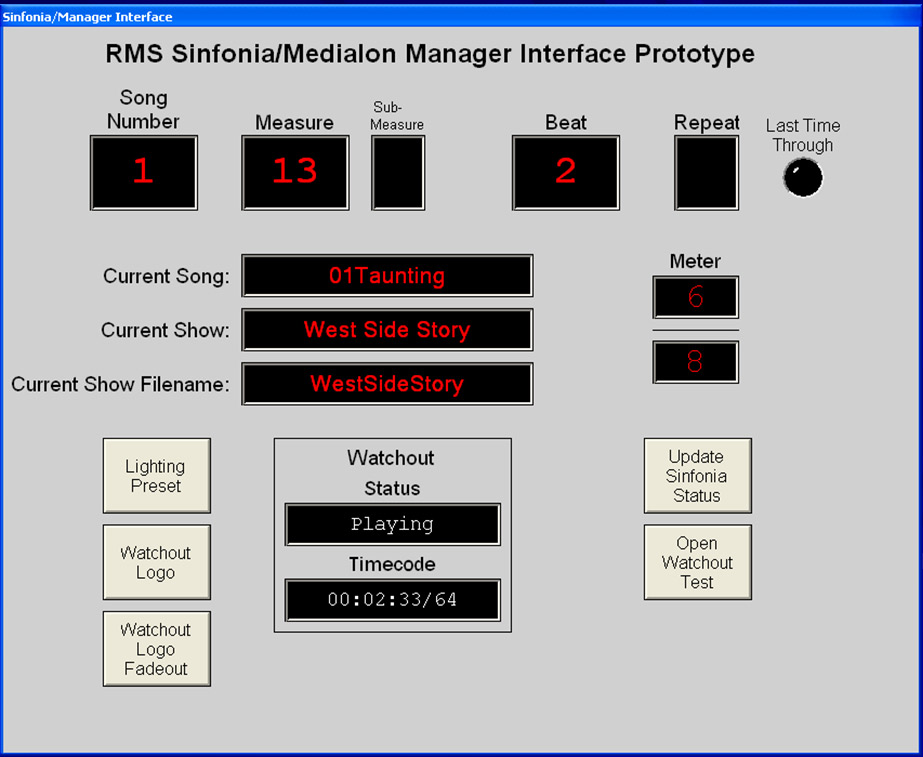

http://mrl.nyu.edu/~jhan/ftirtouch

John Huntington: Synchronizing Live Performance with Musical Time

Modern entertainment and show control systems run in many different ways, but are often used in a linear mode, where all the elements of a show are locked to a fixed time base (and the time base is often linked to some linear media). For example, a prerecorded video might be played in a live show, and lighting and sound cues might then be programmed to trigger at precise times, down to the video frame. This approach is cost-effective and relatively easy to program, but, of course, the actors, dancers, musicians and other performers have to synchronize themselves to this predetermined, rigid clock structure, and this severely limits the performance. Even with those limitations, however, the majority of media-synchronized live shows today sacrifice flexibility in order to gain precision and control, and execute all lighting, video and other cues from a rigid clock. Professor John Huntington and Dr. David B. Smith, colleagues at NYC College of Technology’s Entertainment Technology department, believe that the technology should track the performers, not the other way around, and this is the focus of their research into the use of Musical Time as a synchronization source. Music runs on “musical” or “metric” time, where the musician or conductor has total control over the tempo, down to the beat level. Unlike linear time, Musical Time can slow down or speed up, allowing the music to respond to the actions of singers and other performers.
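The difference between a fixed clock and Musical Time can be illustrated with a small sketch. This is not the researchers' system, only a toy model under stated assumptions: cues are placed on beat numbers, and a hypothetical tempo map (a list of `(length_in_beats, bpm)` segments) stands in for whatever tempo the performers actually take. The function name `beat_to_seconds` is invented for this example.

```python
def beat_to_seconds(beat, tempo_segments):
    """Clock time (seconds) at which `beat` occurs.

    tempo_segments is a list of (length_in_beats, bpm) pairs played in order.
    """
    elapsed = 0.0
    remaining = beat
    for length, bpm in tempo_segments:
        step = min(remaining, length)
        elapsed += step * 60.0 / bpm  # one beat lasts 60 / bpm seconds
        remaining -= step
        if remaining <= 0:
            return elapsed
    raise ValueError("beat lies beyond the end of the tempo map")


# A lighting cue written against the music: "fire on beat 4".
cue_beat = 4

# Hypothetical performances of the same 8 beats.
steady = [(8, 120)]            # constant 120 bpm
slowed = [(2, 120), (6, 60)]   # performers drop to 60 bpm after beat 2

steady_time = beat_to_seconds(cue_beat, steady)
slowed_time = beat_to_seconds(cue_beat, slowed)
```

A cue locked to linear time would fire at the same clock time in both performances; a cue locked to the beat moves with the performers, landing later when they slow down.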

http://www.zircondesigns.com

Some images from the meeting.